Super Performance

TL;DR: We lack a shared, rigorous way to assess an entire organization – most tools either miss key drivers or apply only to specific domains. By meta-analyzing 102 criteria from 14 seminal sources, from Rams’ Design Principles to the Agile Manifesto to Jane Jacobs' Generators of Diversity, this post distills a seven-factor framework (Purposeful, Fit, Vital, Powerful, Fair & Just, Connected, Safe) that you can use to benchmark any company’s systemic health and surface the levers that actually create sustained performance.

How do we know when an entire organization is performing well – not just its people or its teams, but the system as a whole? We have spreadsheets full of individual KPIs, agile dashboards that chart team velocity, 9-box grids for talent. Yet when we try to define what “organizational effectiveness” looks like at the company, division, or functional level, we get one of two things: a hand-wavy answer, or an exclusively business-defined set of metrics that average out to contribution margin.

What tools do we have today? One pretty good example is B Corp certification: it’s a valuable badge for what I would consider organizational responsibility, but its scoring rubric doesn't adequately weight things like product-market fit, customer connection, and community energy. A company can ace the B Corp audit while still being slow and siloed. Another option is to look at baskets of measures focused on the employee experience. Measures of diversity, equity and inclusion are tremendously important. So is employee engagement. So are predictive measures of attrition, a data-driven understanding of manager quality, and a deep-dive on organizational network connectedness (using ONA to measure collaboration distance, spotlight silos, and reveal overloaded hubs).
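To make two of those ONA measures a little more concrete, here is a minimal sketch, assuming you already have a collaboration graph (the people and edges below are invented for illustration, and all function names are hypothetical). Collaboration distance is taken as the fewest hops between two people, found with a breadth-first search; a missing path is the extreme case of a silo. An "overloaded hub" is anyone whose count of direct collaborators reaches a threshold you pick. Real ONA tooling typically uses richer, weighted measures than this.

```python
from collections import deque

# Hypothetical collaboration graph, invented for illustration: an edge means two
# people work together regularly. Every edge is listed in both directions.
COLLAB = {
    "ana": {"ben", "cy", "dee"},
    "ben": {"ana", "cy"},
    "cy": {"ana", "ben"},
    "dee": {"ana", "eli"},
    "eli": {"dee", "fay"},
    "fay": {"eli"},
}


def distances_from(graph, start):
    """Breadth-first search: fewest hops from `start` to everyone reachable."""
    dist = {start: 0}
    queue = deque([start])
    while queue:
        person = queue.popleft()
        for peer in graph[person]:
            if peer not in dist:
                dist[peer] = dist[person] + 1
                queue.append(peer)
    return dist


def collaboration_distance(graph, a, b):
    """Fewest hops between two people, or None if no path connects them (a silo)."""
    return distances_from(graph, a).get(b)


def overloaded_hubs(graph, threshold):
    """People whose count of direct collaborators is at least `threshold`."""
    return sorted(p for p, peers in graph.items() if len(peers) >= threshold)


print(collaboration_distance(COLLAB, "ben", "fay"))  # 4 (ben-ana-dee-eli-fay)
print(overloaded_hubs(COLLAB, 3))                   # ['ana']
```

In this toy graph, everything between the two ends of the organization routes through "ana", which is exactly the kind of hub-plus-long-distance pattern ONA is meant to surface.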

I'd argue that these are not enough, and I hope you're nodding along.

I would also argue that an effort to distinguish "traditional organizations" from "next-gen" or "responsive" organizations is not serving our movement, nor are these definitions even really true, since firms are anything but monolithic. My experience so far is that this is actually not an issue of a separate operating model that some companies have adopted, but rather that there are performance gains on the table for every organization if the humans in that organization collectively decide to do something about it. With that in mind, can I convince you to pre-order my book?

Hidden Patterns

A book about fixing the invisible stuff that makes work suck. Not the obvious stuff like bad bosses or dumb policies, but the deeper patterns that slow teams down, kill good ideas, and waste everyone's time.

It gives you small moves that actually help work get better, no matter where you sit in the org.

Craven self-promotion complete! Back to the content.

So, when it comes to designed human systems, what counts as performance? Put another way, what are the things we’re trying to maximize, even at the cost of other things?

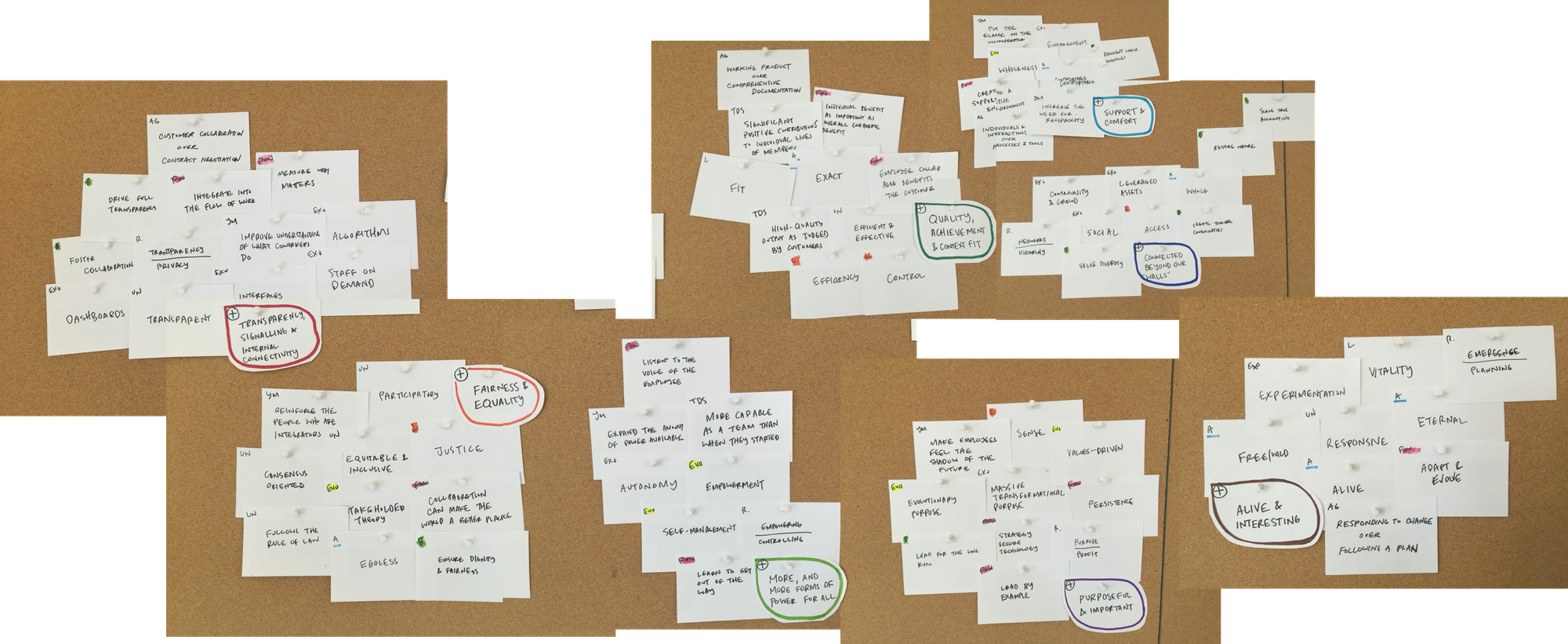

A meta-analysis is a handy tool here, since loads of really smart folks – sometimes individuals, sometimes groups – have given the world lists of performance criteria that can guide the conversation. I gathered a bunch of these lists, put each individual item on cards, and with the help of a few colleagues, put them into groups. I sourced 102 criteria in total, distilled from fourteen seminal references that span industrial design, urbanism, organizational science, and global governance. The list pulls equally from evergreen classics and contemporary fieldwork: Dieter Rams’ Ten Principles of Good Design; the original Agile Manifesto; Christopher Alexander’s Quality Without a Name; Salim Ismail’s growth blueprint Exponential Organizations; Jacob Morgan’s Future of Work; the worker-centric approach of Zeynep Ton’s Good Jobs Institute; Jane Jacobs’ urban vitality lessons from The Death and Life of Great American Cities; Kevin Lynch’s criteria for good city form in A Theory of Good City Form; Frederic Laloux’s teal and green development stages in Reinventing Organizations; the peer-to-peer ethos of the Responsive Org movement; the sustainability-forward leadership agenda of The B Team; UN guidelines on good governance and public administration; the high-performance team research of Wageman, Hackman & Lehman; and Yves Morieux’s Six Simple Rules. If you have some additional sources, send them my way!

Here they are, mapped from the individual examples on the outside to the seven performance criteria on the inside. Super-performance systems are purposeful, fit, vital, powerful, fair & just, connected, and safe. In each case, more is better. In the chart below, you can narrow the sources down with the dropdown, and read some of the background narrative from each source by hovering.

Purposeful

A purposeful organization knows exactly why it exists and makes that reason visible in day-to-day choices. People can repeat it in plain language, customers can feel it in the product, and partners see it in every negotiation. When purpose is this clear, alignment comes free: teams waste less time arguing over priorities because the destination is already agreed. Many of the source texts use the word purpose specifically, but I also count Jane Jacobs' Density and Dieter Rams' Good design is honest as examples of purposeful criteria.

It's the world's most obvious example, but it's a good one. Patagonia’s purpose – protect our home planet – shows up everywhere: lifetime repairs, the “1% for the Planet” pledge, and a marketing budget that doubles as environmental activism. The purpose acts as a north-star filter on growth bets and cost cuts.

Further learning

- Years later, I still think Jim Collins captured the practical side of setting a vision in his Vision Framework (PDF). I prefer "purpose" to "vision" or "mission", but I'm not going to fight you on it. Heck, sometimes I end up using both in a text if I'm trying not to repeat myself.

- Ikigai is another tool that works especially well for people, but also for organizations (and even for helping determine what a team should build next!).

Fit

An organization that scores high on Fit delivers work that meets two conditions: quality you can measure and relevance you can feel. Users adopt the product without hand-holding, and teammates spot and eliminate waste before anyone points it out. Fit turns continuous improvement into a reflex rather than a slogan. Christopher Alexander's Exact, Zeynep Ton's Achievement, and High Quality Output as Judged by Customers from Wageman, Hackman and Lehman are standouts here.

Figma launched multiplayer editing when most design tools were still single-player. The quality bar (speed, no version conflicts) was obvious, but its relevance came from solving a coordination headache designers had simply tolerated. Fitness showed up in usage: teams that tried multiplayer kept it on, and paying seats expanded inside each customer without a sales push. Orgs can display fitness, but so can (and must!) teams, people, products, features, etc. And fitness is always contextual and changing.

Further learning

- Inspired by Marty Cagan

- How Miro Builds Product with Varun Parmar on Lenny's Newsletter

- Gibson Biddle’s Product Strategy

Vital

Vitality shows up when people leave work with more spark than they arrived. You sense it in the pace of Slack threads, the buzz around demos, the quick uptake of a colleague’s side project. High-vitality teams ship, observe, learn, and fold lessons back into the next cycle without ceremony. Vital organizations are alive: their culture is contagious and probably worth marketing to the outside world. They are interesting. I'm especially compelled by the language Exponential Orgs uses around Engagement here.

Basecamp’s “Shape Up” rhythm bakes vitality into the calendar. Every six-week build cycle is followed by a two-week cool-down where engineers chase curiosities, kill nagging bugs, or prototype wild ideas. Because exploration time is guaranteed, energy stays high through the build window; people know they’ll soon get space to tinker and recharge. The practice keeps release velocity steady while helping to prevent burnout.

Further learning

- Alive at Work by Daniel Cable

- WorkLife with Adam Grant

- Julie Zhou's The Looking Glass

Powerful

A powerful organization lets the people closest to the problem decide how to solve it. Authority flows outward and upward, so teams can move fast without begging for sign-offs. Disciplined empowerment backed by tight feedback loops and crystal-clear guardrails gives execs, leaders, managers, individual contributors – that is, everyone! – better control over the organization’s future. This idea comes straight from Yves Morieux's Expand the amount of power available. It is the least common theme among the existing frameworks, but IMO one of the most essential to high performance, and managers are only starting to understand it deeply.

Haier’s “Rendanheyi” model turned a 90,000-person appliance giant into thousands of self-managed micro-enterprises. A product team that spots a market gap can form a contract with manufacturing, spin up a P&L, and launch in months – no executive committee needed. Because revenue shares and career growth hinge on the team’s own results, their empowerment is very real.

Further learning

- Humanocracy by Gary Hamel & Michele Zanini

- The Bayer DSO Case from HBS

- Hear it direct from Bill Anderson on At Work with The Ready

Fair & Just

A Fair & Just organization uses human dignity as performance infrastructure. Pay, feedback, promotions, and everyday courtesies flow without bias – no extra favors for title, tenure, or who you had lunch with. Policies are transparent and applied the same way on Tuesday morning as on Friday at 5 p.m. When people trust the rules, they spend their energy on the work, not on second-guessing whether they can tell each other the truth. This one is straightforward and is mentioned directly in quite a few of the sources.

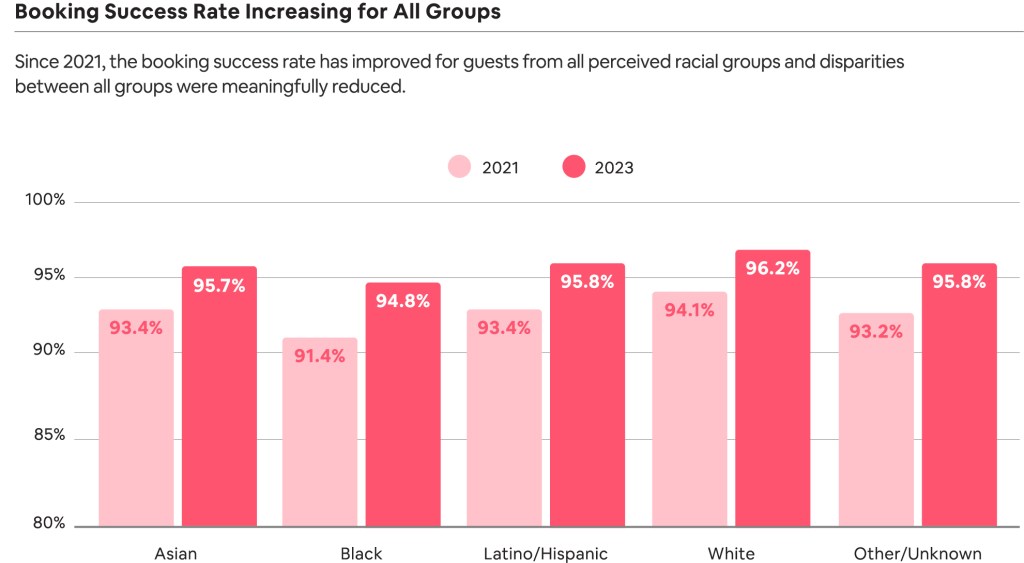

This can and should extend to the product, too! Airbnb’s Project Lighthouse uses privacy-safe data to detect racial gaps in booking approvals; product tweaks since 2020 have cut the Black-vs-white booking-success gap nearly in half, with every group now above a 94% success rate.

Further learning

- Kim Scott's books here are indispensable

- Some nice stuff in Increment by Lara Hogan

- Great research from Project Include

- Stop. Breathe. We can't keep working like this. (The Ezra Klein Show)

Connected

A Connected organization treats information like a renewable resource rather than something worth hoarding: teams keep internal work publicly visible; customers and partners are invited to critique early versions; insights flow both directions. When something breaks, the story spreads faster than the fix, so everyone patches similar faults before they hurt users. An important theme in the research is that a super-performance organization should be connected inside and outside. This concept is by far the most supported in the source material, but probably the most challenging for the companies I've worked with.

GitLab runs its entire OS – roadmaps, policies, even salary formulas – off a 2,000-page public handbook. Issues, merge requests, and post-mortems default to “public,” so customers and strangers can spot gaps, file fixes, and learn alongside internal teams. That openness makes information flow both ways: engineers see real-time feedback from the dev community, while outsiders reuse GitLab’s processes verbatim, creating a virtuous cycle of improvement.

Further learning

- Team of Teams by Stanley McChrystal

- Brie Wolfson's The Kool-Aid Factory

- Gergely Orosz’s newsletter The Pragmatic Engineer

Safe

A team performs best when nobody is bracing for backlash. Safe means workloads are humane, tools are reliable, and people can dissent, surface mistakes, or ask for help without risking status or livelihood. Candor becomes a default, so risks and weak signals show up early, long before they snowball into crises. A standout piece of inspo for this one from the source material is Yves Morieux's Increase the need for reciprocity.

Pixar’s “Braintrust” sessions invite directors to screen rough cuts to a room of peers who offer unfiltered critique. Directors are free to accept or ignore any suggestion, which keeps feedback sharp while preserving creative ownership. Because reputations aren’t threatened by early missteps, teams expose half-finished ideas sooner and fix flaws before animation costs spike. The studio’s hit rate – 23 Oscars and counting – shows how safety underwrites creativity and quality simultaneously. I don't want to hear about Elio in the comments, but companies are always rising and falling, yanno?

Further learning

- All of Amy Edmondson's books

- Google's Project Aristotle

- Psychsafety.com is pretty indispensable; subscribe to their weekly newsletter

Super Performance Org Assessment

So, what do we do with this? I'd propose a new survey, combined with a battery of observable data points, feeding a running external tracker of super-performing people, teams, and organizations. We could track this globally as a replacement for today's employee engagement trackers. Longitudinal as those trackers are, I don't think they tell us much about how our movement toward better ways of working and organizing is doing.

I find this framework equally useful for assessing 👋 future-ready leadership competencies 👋, team performance, and organizational performance. I'll admit that the survey questions below are not perfect, but they're at least directionally correct; you could certainly build a good instrument on top of these ideas if you wanted to, but in the meantime I think they're useful at face value.

Building on that, the observable data points turn out to be, again, pretty useful for spotting where work needs to be done. These measures do tend to favor organizations that are legally able to work in public, but part of me thinks that objection might be a bit of a cop-out, so I'm going with them. You could create your own internal version of this if you wanted to, using measures that you think are better proxies for or indicators of the base ideas.

- Purposeful: Ratio of purpose references to total paragraphs in the CEO letter, 10-K intro, and latest earnings-call transcripts

- Fit: Net Expansion Rate disclosed in investor materials (revenue growth from existing customers YoY)

- Vital: Count of employee-authored tech/design blog posts, conference talks, or open-source commits per 1,000 employees over the last 12 months

- Powerful: Median days from publicly reported bug to merged patch in company-owned GitHub repos (or disclosed engineering tracker)

- Fair & Just: Unadjusted median gender pay gap (%) published in ESG or pay-equity reports

- Connected: Share of merged pull requests opened by external contributors (non-employees) on company public repos over the last 12 months

- Safe: Percentage of the past year's Glassdoor reviews that contain negative safety keywords (“retaliation,” “can’t speak up,” etc.)
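Once the raw data is in hand, most of these indicators reduce to simple ratios, medians, or rates. The sketch below assumes the data has already been collected (it does not fetch anything from GitHub, Glassdoor, or filings), and every function name and keyword list in it is hypothetical. The Net Expansion Rate uses the common net-revenue-retention form, (starting revenue + expansion - contraction - churn) / starting revenue for the same customer cohort; companies define it slightly differently in their disclosures. The pay gap follows the unadjusted median convention: the difference between male and female median pay as a percentage of male median pay.

```python
from datetime import date
from statistics import median

# All function names and keyword lists below are hypothetical illustrations.
PURPOSE_WORDS = ("purpose", "mission")
SAFETY_WORDS = ("retaliation", "can't speak up")


def _normalize(text):
    """Lowercase and turn curly apostrophes into straight ones before matching."""
    return text.lower().replace("\u2019", "'")


def purpose_ratio(paragraphs, keywords=PURPOSE_WORDS):
    """Purposeful: share of paragraphs that mention at least one purpose keyword."""
    hits = sum(any(k in _normalize(p) for k in keywords) for p in paragraphs)
    return hits / len(paragraphs)


def net_expansion_rate(start_revenue, expansion, contraction, churned):
    """Fit: this year's revenue from last year's customers, as a % of last year's."""
    return 100 * (start_revenue + expansion - contraction - churned) / start_revenue


def per_thousand(count, employees):
    """Vital: blog posts, talks, or commits per 1,000 employees."""
    return 1000 * count / employees


def median_days_to_patch(bugs):
    """Powerful: median days from bug report to merged patch; bugs = [(reported, merged)]."""
    return median((merged - reported).days for reported, merged in bugs)


def median_pay_gap(male_pay, female_pay):
    """Fair & Just: unadjusted median gender pay gap, as a % of male median pay."""
    m, f = median(male_pay), median(female_pay)
    return 100 * (m - f) / m


def external_pr_share(merged_pr_authors, employees):
    """Connected: share of merged PRs whose author is not on the employee list."""
    external = sum(author not in employees for author in merged_pr_authors)
    return external / len(merged_pr_authors)


def safety_flag_share(reviews, keywords=SAFETY_WORDS):
    """Safe: share of reviews containing at least one negative safety keyword."""
    flagged = sum(any(k in _normalize(r) for k in keywords) for r in reviews)
    return flagged / len(reviews)


# Toy inputs, invented for illustration:
print(net_expansion_rate(100.0, 30.0, 5.0, 10.0))  # 115.0
print(median_pay_gap([50, 60, 70], [45, 54, 63]))  # 10.0
print(median_days_to_patch([
    (date(2024, 1, 1), date(2024, 1, 3)),
    (date(2024, 1, 10), date(2024, 1, 20)),
    (date(2024, 2, 1), date(2024, 2, 5)),
]))  # 4
```

Keyword matching like this is crude (it will miss paraphrases and catch negations), which is one more reason to treat these numbers as proxies rather than verdicts.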

I tried this myself with five public companies that shall remain nameless. I had ChatGPT gather the data, then used my own expert judgment to normalize what it found into a legible score, from 1 point (minimum) to 10 points (maximum). This does flatten some of the assessment, but sometimes you gotta make tradeoffs for the sake of a prototype.
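If you wanted to replace the judgment step with something mechanical, one option is a clamped linear rescale onto the 1 to 10 range. This is a hypothetical alternative, not the method used here, and the `worst` and `best` reference points are assumptions you would have to choose per metric (for example, from the range across a peer set). Passing `worst` greater than `best` handles lower-is-better measures such as days to patch, the pay gap, or the safety-keyword share, so a higher score always means better, consistent with "more is better" for every factor.

```python
def to_score(value, worst, best):
    """Rescale a raw metric onto 1-10: `best` maps to 10, `worst` maps to 1.

    For lower-is-better metrics, pass worst > best (e.g. worst=30, best=2 days).
    Values beyond either reference point are clamped to the ends of the scale.
    """
    if best == worst:
        raise ValueError("best and worst must differ")
    fraction = (value - worst) / (best - worst)
    fraction = min(max(fraction, 0.0), 1.0)  # clamp to the reference range
    return round(1 + 9 * fraction, 1)


# Hypothetical reference points, chosen only for illustration:
print(to_score(110, worst=90, best=130))  # Net Expansion Rate of 110% -> 5.5
print(to_score(2, worst=30, best=2))      # 2 median days to patch -> 10.0
```

A linear rescale is the simplest choice; percentile ranks against a peer set would be more robust to outliers, at the cost of needing more companies in the sample.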

"SaaS A" is a company that makes software for people who make software. This software-for-developers firm runs on radical openness: monthly releases, 25% of code merged from outsiders, public salary formulas, and incident write-ups within a day. Those practices push every score into the top decile, so it runs the table on the assessment. Great company to work for. Great product. Good for the world.

"SaaS B" is another toolmaker for teams. It continually reiterates its purpose and keeps net retention high, but external collaboration is limited and its gender pay gap is still large (though disclosed and tied to exec bonuses). Hence strong 8s and 9s on Purposeful, Fit, Vital, and Powerful, but middling 5–7s on Connected, Fair & Just, and Safe.

"Consumer Tech A" is a commerce platform known for shipping fast – the median pull‑request merges in five hours – but it recently cut DEI funding and has so‑so Glassdoor scores. That explains a perfect 10 on Powerful and solid 8s elsewhere, offset by a 3 on Fair & Just and 4 on Safe.

"Consumer Tech B" is a global streaming giant with the world’s lowest churn and a culture that prizes candor. Purpose references saturate its ESG report, pay equity is near parity, but it releases post‑mortems slowly. Thus 10s in Purposeful and Fit, strong 8s and 9s in most criteria, and a 7 in Connected.

"Financial Services" is a tech-forward bank: clear social purpose, high customer NPS, and 90% “great place to work” scores. But regulatory friction slows code merges, diversity gaps persist in international teams, and incident transparency is predictably thin. Result: high Purposeful and Safe (9s), mid-table Fit and Vital (7s), but 4s in Fair & Just and Connected.
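Reading a profile for levers can be made explicit, too. The sketch below uses Consumer Tech A's scores as described above (a 10 on Powerful, 8s elsewhere, a 3 on Fair & Just and a 4 on Safe) and flags factors that trail the profile's own median by at least two points. The function name, the unweighted mean, and the two-point `gap` are all assumptions for illustration; the framework itself doesn't prescribe an aggregation rule.

```python
from statistics import median

FACTORS = ("Purposeful", "Fit", "Vital", "Powerful", "Fair & Just", "Connected", "Safe")


def summarize(profile, gap=2):
    """Return (unweighted mean, lagging factors) for a 1-10 score profile.

    A factor "lags" when it sits at least `gap` points below the profile's own
    median; lagging factors are listed lowest score first as candidate levers.
    """
    missing = [f for f in FACTORS if f not in profile]
    if missing:
        raise ValueError(f"missing scores for: {missing}")
    scores = [profile[f] for f in FACTORS]
    typical = median(scores)
    lagging = sorted((f for f in FACTORS if typical - profile[f] >= gap),
                     key=profile.get)
    return round(sum(scores) / len(scores), 1), lagging


# Consumer Tech A, as described in the text.
consumer_tech_a = {
    "Purposeful": 8, "Fit": 8, "Vital": 8, "Powerful": 10,
    "Fair & Just": 3, "Connected": 8, "Safe": 4,
}
print(summarize(consumer_tech_a))  # (7.0, ['Fair & Just', 'Safe'])
```

Comparing against the profile's own median, rather than a fixed cutoff, keeps the focus on the organization's relative weak spots, which is where the levers usually are.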

To me, this a) scans and b) is useful. I'm fairly certain it would work well with teams, too.

What next?

So, to the comments and maybe email responses here: Would you use this? Should we do something like this? Are these criteria working for you?
