A new field experiment out of INSEAD and the University of Mannheim offers the cleanest causal evidence I've seen on what happens to collaboration when people get AI tools at work. At heart, it's an org design story.

What happened

Researchers ran a randomized controlled trial at a European technology services firm, covering 316 employees across 42 teams. Half got access to a GenAI assistant built on retrieval-augmented generation (RAG) and grounded in the company's own knowledge base: CRM data, internal docs, meeting recordings, email history. The other half kept working as usual. Three months later, the researchers measured what changed.

What they found

- People talked to each other more, not less. Employees with the AI tool gained significantly more collaboration ties (+7.77 in degree centrality vs. +1.12 for the control group) and knowledge-sharing ties (+5.21 vs. +0.84). The AI made human interaction more worthwhile. Good.

- Specialists became knowledge magnets. Technical experts saw the biggest jump in being sought out for knowledge (In-Degree up 5.92 vs. 1.96 for generalists). The AI made deep expertise more valuable, not less; it helped people find and access the right expert faster.

- Generalists shipped more. Sales staff (the generalists) saw the biggest productivity gains, completing roughly 28% more projects. The AI handled enough coordination overhead that integrators could actually integrate.

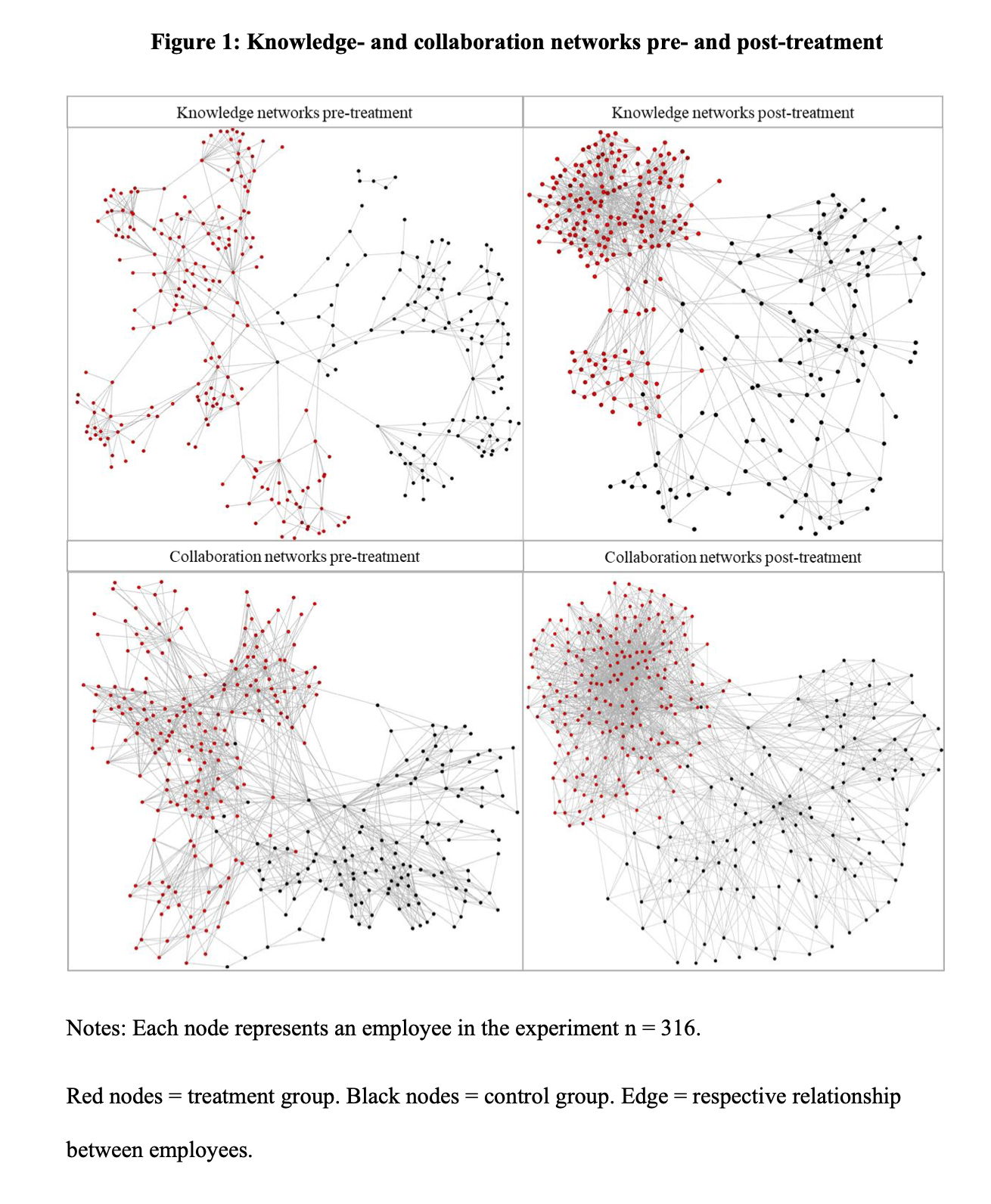

- The network itself changed shape. The before/after network visualizations are striking: treatment group nodes (red) go from scattered clusters to a dense, interconnected mesh. The org's informal structure literally rewired in three months.
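To make the centrality figures above more concrete, here is a minimal Python sketch of how per-person tie counts, and their average change by group, could be computed. It assumes "degree centrality" here means a raw count of ties (magnitudes like +7.77 suggest counts rather than normalized scores) and that knowledge ties point from the person seeking help to the person sought. The names, ties, and group assignments are hypothetical toy data, not the study's, and this is not the researchers' actual method.

```python
from collections import Counter
from statistics import mean

# Hypothetical toy data: names, ties, and groups are illustrative, not from the study.
TREATMENT = ["ana", "ben", "cai"]
CONTROL = ["dev", "eli", "fay"]

# Undirected collaboration ties before and after the intervention (hypothetical).
COLLAB_BEFORE = [("ana", "ben"), ("dev", "eli")]
COLLAB_AFTER = [("ana", "ben"), ("ana", "cai"), ("ben", "cai"),
                ("ana", "dev"), ("dev", "eli")]

# Directed knowledge ties as (seeker, person_sought) (hypothetical).
KNOWLEDGE_AFTER = [("ben", "cai"), ("ana", "cai"), ("dev", "cai"), ("cai", "ana")]


def degree(edges):
    """Raw degree: number of distinct undirected ties per person (assumed definition)."""
    ties = {frozenset(edge) for edge in edges if edge[0] != edge[1]}
    counts = Counter()
    for tie in ties:
        for person in tie:
            counts[person] += 1
    return counts


def in_degree(edges):
    """Raw in-degree: how many distinct people sought each person out."""
    counts = Counter()
    for seeker, sought in set(edges):
        if seeker != sought:
            counts[sought] += 1
    return counts


def mean_change(before, after, people):
    """Average per-person change in a tie count; Counter treats missing people as 0."""
    return mean(after[p] - before[p] for p in people)


if __name__ == "__main__":
    before, after = degree(COLLAB_BEFORE), degree(COLLAB_AFTER)
    print("treatment degree change:", round(mean_change(before, after, TREATMENT), 2))
    print("control degree change:", round(mean_change(before, after, CONTROL), 2))
    print("most sought-out:", in_degree(KNOWLEDGE_AFTER).most_common(1))
```

In this toy network the treatment group's average degree rises by about 1.67 versus about 0.33 for control, and "cai" is sought out by three colleagues. That is the same shape of comparison, at toy scale, as the treatment-vs-control and specialist-vs-generalist figures.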

The org design angle

Most of the AI-and-work discourse is stuck at the individual level: will AI make me faster? Will it take my job? This paper shifts the unit of analysis to the network, and the findings map directly onto patterns I think about constantly.

The first is Expanded Available Power. When coordination costs drop, more people can participate in more decisions and exchanges. More intelligence in the system, artificial and human, means more distributed capacity. Specialists who were previously bottlenecked by translation overhead suddenly became accessible. Generalists who spent their days chasing down context could focus on synthesis. Everyone's effective range expanded.

The second is Guilds, or more precisely, what guilds are trying to solve. The paper's "knowledge catalyst" finding boils down to this: AI can do what communities of practice are supposed to do, surfacing expertise and making it findable. Specialists' In-Degree centrality jumped because the AI made their knowledge legible to the rest of the org. That's the promise of cross-cutting knowledge networks, achieved through tooling rather than (or in addition to) structure.

And there's a Network of Teams implication here too. The researchers found that the increase in ties wasn't random: routine, low-value exchanges were replaced by targeted, high-value ones. The network got denser but also more purposeful. That's what good network design does: it favors better connections where they matter over "more connections everywhere."

What's unresolved

A few things the paper can't answer yet. The study ran for three months: long enough to see effects, short enough that novelty could be doing some of the work. Does the organic rewiring persist, or do networks settle back? The researchers acknowledge this limitation.

There's also the overload question. If specialists become knowledge magnets, do they eventually drown in requests? Does that mean we need more specialists, or do specialists need to build tools that extend their capacity? The paper found that knowledge In-Degree and project output were negatively correlated: the people everyone consults aren't the ones shipping the most projects. That tension doesn't go away with AI, and it may well get worse.

And the sample is a single firm in Central Europe, with self-reported network data. Strong design, genuine randomization, but one context. The proposed mechanism is plausible and well-theorized, but it needs more replications before we treat it as settled.

What to watch

- Network effects over time. Whether the increased connectivity persists past the novelty phase, or whether organizations need to actively maintain the conditions that produced it.

- Specialist overload. Whether knowledge magnets burn out when the whole org can suddenly find them. The negative correlation between being consulted and shipping projects is a strong signal.

- Grounded vs. generic AI. This study used a RAG system embedded in firm-specific knowledge. Generic assistants like ChatGPT or Claude, or even Glean, are unlikely to produce the same network effects. Watch whether the context is doing most of the work (I suspect it is).

- Structural response. Whether organizations that see these network shifts redesign in response (smaller teams, fewer coordination roles, new knowledge-sharing rituals) or just pocket the productivity gains and move on.